Alexander Waibel

Lombard Speech Synthesis for Any Voice with Controllable Style Embeddings

Jan 19, 2026

Abstract: The Lombard effect plays a key role in natural communication, particularly in noisy environments or when addressing hearing-impaired listeners. We present a controllable text-to-speech (TTS) system capable of synthesizing Lombard speech for any speaker without requiring explicit Lombard data during training. Our approach leverages style embeddings learned from a large, prosodically diverse dataset and analyzes their correlation with Lombard attributes using principal component analysis (PCA). By shifting the relevant PCA components, we manipulate the style embeddings and incorporate them into our TTS model to generate speech at desired Lombard levels. Evaluations demonstrate that our method preserves naturalness and speaker identity, enhances intelligibility under noise, and provides fine-grained control over prosody, offering a robust solution for controllable Lombard TTS for any speaker.
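The PCA-shift idea in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the toy two-dimensional "embeddings", and the choice to shift only the top principal component are assumptions made for illustration. Because principal components are orthonormal, adding `delta` to one PCA coordinate and projecting back is the same as adding `delta` times that component to the embedding.

```python
import math


def mean_vector(rows):
    """Column-wise mean of a list of equal-length vectors."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


def top_component(rows, iters=200):
    """Estimate the first principal component by power iteration.

    Returns (mean, unit_vector). Assumes the start vector is not
    orthogonal to the dominant direction of the data.
    """
    mu = mean_vector(rows)
    centered = [[x - m for x, m in zip(r, mu)] for r in rows]
    dim = len(mu)
    v = [1.0 / math.sqrt(dim)] * dim
    for _ in range(iters):
        # w = X^T (X v), i.e. the unnormalized covariance applied to v.
        proj = [sum(a * b for a, b in zip(r, v)) for r in centered]
        w = [sum(p * r[j] for p, r in zip(proj, centered)) for j in range(dim)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return mu, v


def shift_along(embedding, component, delta):
    """Move an embedding by `delta` along one unit-length component."""
    return [e + delta * c for e, c in zip(embedding, component)]


# Toy stand-in for style embeddings; real ones would be high-dimensional.
toy_embeddings = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
toy_mean, toy_pc = top_component(toy_embeddings)
shifted = shift_along([1.0, 1.0], toy_pc, 2 ** 0.5)
```

In this sketch `delta` plays the role of a hypothetical "Lombard level" knob. Which components correlate with Lombard attributes, and how far to shift them, is determined by the analysis in the paper and is not reproduced here.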

Paragraph Segmentation Revisited: Towards a Standard Task for Structuring Speech

Dec 30, 2025

Abstract: Automatic speech transcripts are often delivered as unstructured word streams that impede readability and repurposing. We recast paragraph segmentation as the missing structuring step and fill three gaps at the intersection of speech processing and text segmentation. First, we establish TEDPara (human-annotated TED talks) and YTSegPara (YouTube videos with synthetic labels) as the first benchmarks for the paragraph segmentation task. The benchmarks focus on the underexplored speech domain, where paragraph segmentation has traditionally not been part of post-processing, while also contributing to the wider text segmentation field, which still lacks robust and naturalistic benchmarks. Second, we propose a constrained-decoding formulation that lets large language models insert paragraph breaks while preserving the original transcript, enabling faithful, sentence-aligned evaluation. Third, we show that a compact model (MiniSeg) attains state-of-the-art accuracy and, when extended hierarchically, jointly predicts chapters and paragraphs with minimal computational cost. Together, our resources and methods establish paragraph segmentation as a standardized, practical task in speech processing.
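The constrained-decoding idea can be sketched roughly as follows. At every step the decoder may emit only one of two things: the next sentence of the transcript, copied verbatim, or a paragraph-break marker. The `break_score` callable is a hypothetical stand-in for a language model's preference for a break, and the `<p>` marker and the threshold are likewise assumptions, not details from the paper.

```python
BREAK = "<p>"  # hypothetical break marker, not taken from the paper


def segment(sentences, break_score, threshold=0.5):
    """Insert paragraph breaks without altering any transcript sentence.

    break_score(context, next_sentence) should return a value in [0, 1];
    here it stands in for a model's probability of starting a paragraph.
    """
    output = []
    for i, sentence in enumerate(sentences):
        # The only allowed choices are BREAK or the next source sentence.
        if i > 0 and break_score(sentences[:i], sentence) > threshold:
            output.append(BREAK)
        output.append(sentence)  # copied verbatim, never rewritten
    return output


def strip_breaks(output):
    """Recover the original sentence sequence from a segmented output."""
    return [item for item in output if item != BREAK]


# Toy scorer: start a paragraph at an explicit discourse marker.
def toy_score(context, sentence):
    return 1.0 if sentence.startswith("Next") else 0.0


talk = [
    "Hello everyone.",
    "Today I talk about speech.",
    "Next, let us look at data.",
    "It is large.",
]
segmented = segment(talk, toy_score)
```

Because the output differs from the input only by inserted markers, `strip_breaks(segmented)` returns the transcript unchanged, which is what makes sentence-aligned evaluation possible.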

Shared Latent Representation for Joint Text-to-Audio-Visual Synthesis

Nov 07, 2025

Abstract: We propose a text-to-talking-face synthesis framework leveraging latent speech representations from HierSpeech++. A Text-to-Vec module generates Wav2Vec2 embeddings from text, which jointly condition speech and face generation. To handle distribution shifts between clean and TTS-predicted features, we adopt two-stage training: pretraining on Wav2Vec2 embeddings and finetuning on TTS outputs. This enables tight audio-visual alignment, preserves speaker identity, and produces natural, expressive speech and synchronized facial motion without ground-truth audio at inference. Experiments show that conditioning on TTS-predicted latent features outperforms cascaded pipelines, improving both lip-sync and visual realism.

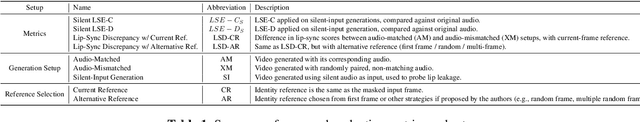

Assessing Identity Leakage in Talking Face Generation: Metrics and Evaluation Framework

Nov 05, 2025

Abstract: Inpainting-based talking face generation aims to preserve video details such as pose, lighting, and gestures while modifying only lip motion, often using an identity reference image to maintain speaker consistency. However, this mechanism can introduce lip leaking, where generated lips are influenced by the reference image rather than solely by the driving audio. Such leakage is difficult to detect with standard metrics and conventional test setups. To address this, we propose a systematic evaluation methodology to analyze and quantify lip leakage. Our framework employs three complementary test setups: silent-input generation, mismatched audio-video pairing, and matched audio-video synthesis. We also introduce derived metrics including lip-sync discrepancy and silent-audio-based lip-sync scores. In addition, we study how different identity reference selections affect leakage, providing insights into reference design. The proposed methodology is model-agnostic and establishes a more reliable benchmark for future research in talking face generation.

CAPE: A CLIP-Aware Pointing Ensemble of Complementary Heatmap Cues for Embodied Reference Understanding

Jul 29, 2025

Abstract: We address the problem of Embodied Reference Understanding, which involves predicting the object that a person in the scene is referring to through both a pointing gesture and language. Accurately identifying the referent requires multimodal understanding: integrating textual instructions, visual pointing, and scene context. However, existing methods often struggle to effectively leverage visual cues for disambiguation. We also observe that, while the referent is often aligned with the head-to-fingertip line, it occasionally aligns more closely with the wrist-to-fingertip line. Therefore, relying on a single-line assumption can be overly simplistic and may lead to suboptimal performance. To address this, we propose a dual-model framework, where one model learns from the head-to-fingertip direction and the other from the wrist-to-fingertip direction. We further introduce a Gaussian ray heatmap representation of these lines and use them as input to provide a strong supervisory signal that encourages the model to better attend to pointing cues. To combine the strengths of both models, we present the CLIP-Aware Pointing Ensemble module, which performs a hybrid ensemble based on CLIP features. Additionally, we propose an object center prediction head as an auxiliary task to further enhance referent localization. We validate our approach through extensive experiments and analysis on the benchmark YouRefIt dataset, achieving an improvement of approximately 4 mAP at the 0.25 IoU threshold.
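One plausible reading of a "Gaussian ray heatmap" is sketched below: each pixel's value decays as a Gaussian of its distance to the ray that starts at the origin keypoint (head or wrist) and passes through the fingertip. The exact formulation used by CAPE is not given in the abstract, so the function name, the `sigma` parameter, and the clamping at the ray start are all assumptions.

```python
import math


def ray_heatmap(width, height, origin, fingertip, sigma=2.0):
    """Build a height x width heatmap peaked along the pointing ray.

    origin and fingertip are (x, y) pixel coordinates. The ray starts at
    origin and extends through fingertip without an upper bound.
    """
    ox, oy = origin
    fx, fy = fingertip
    dx, dy = fx - ox, fy - oy
    length = math.hypot(dx, dy)
    if length == 0:
        raise ValueError("origin and fingertip must differ")
    ux, uy = dx / length, dy / length  # unit direction of the ray

    heat = []
    for y in range(height):
        row = []
        for x in range(width):
            px, py = x - ox, y - oy
            # Projection onto the ray, clamped so points behind the
            # origin are measured against the origin itself.
            t = max(px * ux + py * uy, 0.0)
            dist = math.hypot(px - t * ux, py - t * uy)
            row.append(math.exp(-(dist * dist) / (2.0 * sigma * sigma)))
        heat.append(row)
    return heat


# Two heatmaps for the same fingertip, one per line hypothesis.
head_map = ray_heatmap(8, 8, origin=(0, 0), fingertip=(2, 2))
wrist_map = ray_heatmap(8, 8, origin=(0, 2), fingertip=(2, 2))
```

Indexing is `heat[y][x]`. In the dual-model setup described in the abstract, one model would receive the head-based map and the other the wrist-based map.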

Mask-Free Audio-driven Talking Face Generation for Enhanced Visual Quality and Identity Preservation

Jul 28, 2025

Abstract: Audio-Driven Talking Face Generation aims at generating realistic videos of talking faces, focusing on accurate audio-lip synchronization without deteriorating any identity-related visual details. Recent state-of-the-art methods are based on inpainting, meaning that the lower half of the input face is masked, and the model fills the masked region by generating lips aligned with the given audio. Hence, to preserve identity-related visual details from the lower half, these approaches additionally require an unmasked identity reference image randomly selected from the same video. However, this common masking strategy suffers from (1) information loss in the input faces, significantly affecting the networks' ability to preserve visual quality and identity details, (2) variation between identity reference and input image degrading reconstruction performance, and (3) the identity reference negatively impacting the model, causing unintended copying of elements unaligned with the audio. To address these issues, we propose a mask-free talking face generation approach while maintaining the 2D-based face editing task. Instead of masking the lower half, we transform the input images to have closed mouths, using a two-step landmark-based approach trained in an unpaired manner. Subsequently, we provide these edited but unmasked faces to a lip adaptation model alongside the audio to generate appropriate lip movements. Thus, our approach needs neither masked input images nor identity reference images. We conduct experiments on the benchmark LRS2 and HDTF datasets and perform various ablation studies to validate our contributions.

Towards Better Disentanglement in Non-Autoregressive Zero-Shot Expressive Voice Conversion

Jun 04, 2025

Abstract: Expressive voice conversion aims to transfer both speaker identity and expressive attributes from a target speech to a given source speech. In this work, we improve over a self-supervised, non-autoregressive framework with a conditional variational autoencoder, focusing on reducing source timbre leakage and improving linguistic-acoustic disentanglement for better style transfer. To minimize style leakage, we use multilingual discrete speech units for content representation and reinforce embeddings with augmentation-based similarity loss and mix-style layer normalization. To enhance expressivity transfer, we incorporate local F0 information via cross-attention and extract style embeddings enriched with global pitch and energy features. Experiments show our model outperforms baselines in emotion and speaker similarity, demonstrating superior style adaptation and reduced source style leakage.

KIT's Low-resource Speech Translation Systems for IWSLT2025: System Enhancement with Synthetic Data and Model Regularization

May 26, 2025

Abstract: This paper presents KIT's submissions to the IWSLT 2025 low-resource track. We develop both cascaded systems, consisting of Automatic Speech Recognition (ASR) and Machine Translation (MT) models, and end-to-end (E2E) Speech Translation (ST) systems for three language pairs: Bemba, North Levantine Arabic, and Tunisian Arabic into English. Building upon pre-trained models, we fine-tune our systems with different strategies to utilize resources efficiently. This study further explores system enhancement with synthetic data and model regularization. Specifically, we investigate MT-augmented ST by generating translations from ASR data using MT models. For North Levantine, which lacks parallel ST training data, a system trained solely on synthetic data slightly surpasses the cascaded system trained on real data. We also explore augmentation using text-to-speech models by generating synthetic speech from MT data, demonstrating the benefits of synthetic data in improving both ASR and ST performance for Bemba. Additionally, we apply intra-distillation to enhance model performance. Our experiments show that this approach consistently improves results across ASR, MT, and ST tasks, as well as across different pre-trained models. Finally, we apply Minimum Bayes Risk decoding to combine the cascaded and end-to-end systems, achieving an improvement of approximately 1.5 BLEU points.
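Minimum Bayes Risk decoding over a pooled candidate list can be sketched as below. The hypotheses from the cascaded and end-to-end systems are pooled, and the one with the highest average utility against all the others is selected. The unigram F1 utility is a deliberately simple stand-in chosen for this illustration; the abstract does not say which utility metric the submission used, and the function names are hypothetical.

```python
from collections import Counter


def unigram_f1(hypothesis, reference):
    """Bag-of-words F1 between two whitespace-tokenized strings."""
    hyp = Counter(hypothesis.split())
    ref = Counter(reference.split())
    overlap = sum((hyp & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(hyp.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def mbr_select(candidates, utility=unigram_f1):
    """Return the candidate with the highest mean utility vs. the rest."""
    best, best_score = None, -1.0
    for i, hyp in enumerate(candidates):
        others = [c for j, c in enumerate(candidates) if j != i]
        if others:
            score = sum(utility(hyp, o) for o in others) / len(others)
        else:
            score = 0.0
        if score > best_score:
            best, best_score = hyp, score
    return best


# Toy pool: imagine the first two come from one system, the third from another.
pool = [
    "the cat sat on the mat",
    "the cat sat on a mat",
    "a dog ran",
]
choice = mbr_select(pool)
```

The selected hypothesis is the one most similar on average to the rest of the pool, so an outlier from either system is unlikely to be chosen.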

KIT's Offline Speech Translation and Instruction Following Submission for IWSLT 2025

May 19, 2025

Abstract: The scope of the International Workshop on Spoken Language Translation (IWSLT) has recently broadened beyond traditional Speech Translation (ST) to encompass a wider array of tasks, including Speech Question Answering and Summarization. This shift is partly driven by the growing capabilities of modern systems, particularly with the success of Large Language Models (LLMs). In this paper, we present the Karlsruhe Institute of Technology's submissions for the Offline ST and Instruction Following (IF) tracks, where we leverage LLMs to enhance performance across all tasks. For the Offline ST track, we propose a pipeline that employs multiple automatic speech recognition systems, whose outputs are fused using an LLM with document-level context. This is followed by a two-step translation process, incorporating an additional refinement step to improve translation quality. For the IF track, we develop an end-to-end model that integrates a speech encoder with an LLM to perform a wide range of instruction-following tasks. We complement it with a final document-level refinement stage to further enhance output quality by using contextual information.

The AI Co-Ethnographer: How Far Can Automation Take Qualitative Research?

Apr 21, 2025

Abstract: Qualitative research often involves labor-intensive processes that are difficult to scale while preserving analytical depth. This paper introduces The AI Co-Ethnographer (AICoE), a novel end-to-end pipeline developed for qualitative research and designed to move beyond the limitations of simply automating code assignments, offering a more integrated approach. AICoE organizes the entire process, encompassing open coding, code consolidation, code application, and even pattern discovery, leading to a comprehensive analysis of qualitative data.
